ATK: Automatic Task-driven Keypoint Selection for Robust Policy Learning

Conference on Robot Learning (CoRL), 2025

Yunchu Zhang, Shubham Mittal, Zhengyu Zhang, Liyiming Ke, Siddhartha Srinivasa, Abhishek Gupta

Steering Your Diffusion Policy with Latent Space Reinforcement Learning

Conference on Robot Learning (CoRL), 2025

Best Paper Award Nomination

Andrew Wagenmaker*, Mitsuhiko Nakamoto*, Yunchu Zhang*, Seohong Park, Waleed Yagoub, Anusha Nagabandi, Abhishek Gupta*, Sergey Levine

CCIL: Continuity-based Data Augmentation for Corrective Imitation Learning

International Conference on Learning Representations (ICLR), 2024

Liyiming Ke*, Yunchu Zhang*, Abhay Deshpande, Siddhartha Srinivasa, Abhishek Gupta

Cherry-Picking with Reinforcement Learning

Robotics: Science and Systems (RSS), 2023

Yunchu Zhang*, Liyiming Ke*, Abhay Deshpande, Abhishek Gupta, Siddhartha Srinivasa

- Proposed a system, CherryBot, for training deep RL agents for dynamic fine manipulation without rigid surface support.

- Demonstrated over multiple random seeds that, within 30 minutes of real-world interaction, CherryBot achieves a 100% success rate on an exacting proxy task.

- Generalized to diverse evaluation scenarios, including dynamic disturbances, randomized reset conditions, varied perception noise, and varied object shapes.

Energy-based Models are Zero-Shot Planners for Compositional Scene Rearrangement

Robotics: Science and Systems (RSS), 2023

Nikolaos Gkanatsios*, Ayush Jain*, Zhou Xian, Yunchu Zhang, Katerina Fragkiadaki

- Proposed a framework called IMAGGINE for robot instruction that maps language instructions to goal scene configurations of the relevant object and part entities, and their locations in the scene.

- Designed a semantic parser which maps language commands to compositions of language-conditioned energy-based models that generate the scene in a modular and compositional way.

- Conditioned the pick-and-place locations of a robotic gripper on the predicted entity locations, using a transporter network to rearrange the objects in the scene.

Planning with Spatial-Temporal Abstraction from Point Clouds for Deformable Object Manipulation

Conference on Robot Learning (CoRL), 2022

Xingyu Lin*, Carl Qi*, Yunchu Zhang, Zhiao Huang, Katerina Fragkiadaki, Yunzhu Li, Chuang Gan, David Held

- Proposed a framework that PlAns with Spatial and Temporal Abstraction (PASTA) by learning a set of skill abstraction modules over a 3D set representation.

- Composed a set of skills to solve complex tasks with more entities and longer horizons than those seen during training.

- Demonstrated a real-world manipulation system that uses PASTA to plan with multiple tool-use skills to solve a challenging deformable object manipulation task.

Visually-Grounded Library of Behaviours for Manipulating Diverse Objects across Diverse Configurations and Views

Conference on Robot Learning (CoRL), 2021

Jingyun Yang*, Hsiao-Yu Fish Tung*, Yunchu Zhang*, Gaurav Pathak, Ashwini Pokle, Chris Atkeson, Katerina Fragkiadaki

- Built a behavior selector that conditions on invariant object properties to select the behaviors that can successfully perform the desired task on the object in hand.

- Generated and collected a library of behaviors, each of which conditions on variable object properties to predict manipulation actions over time.

- Extracted semantically-rich and affordance-aware view-invariant 3D object feature representations through self-supervised geometry-aware 2D-to-3D neural networks.

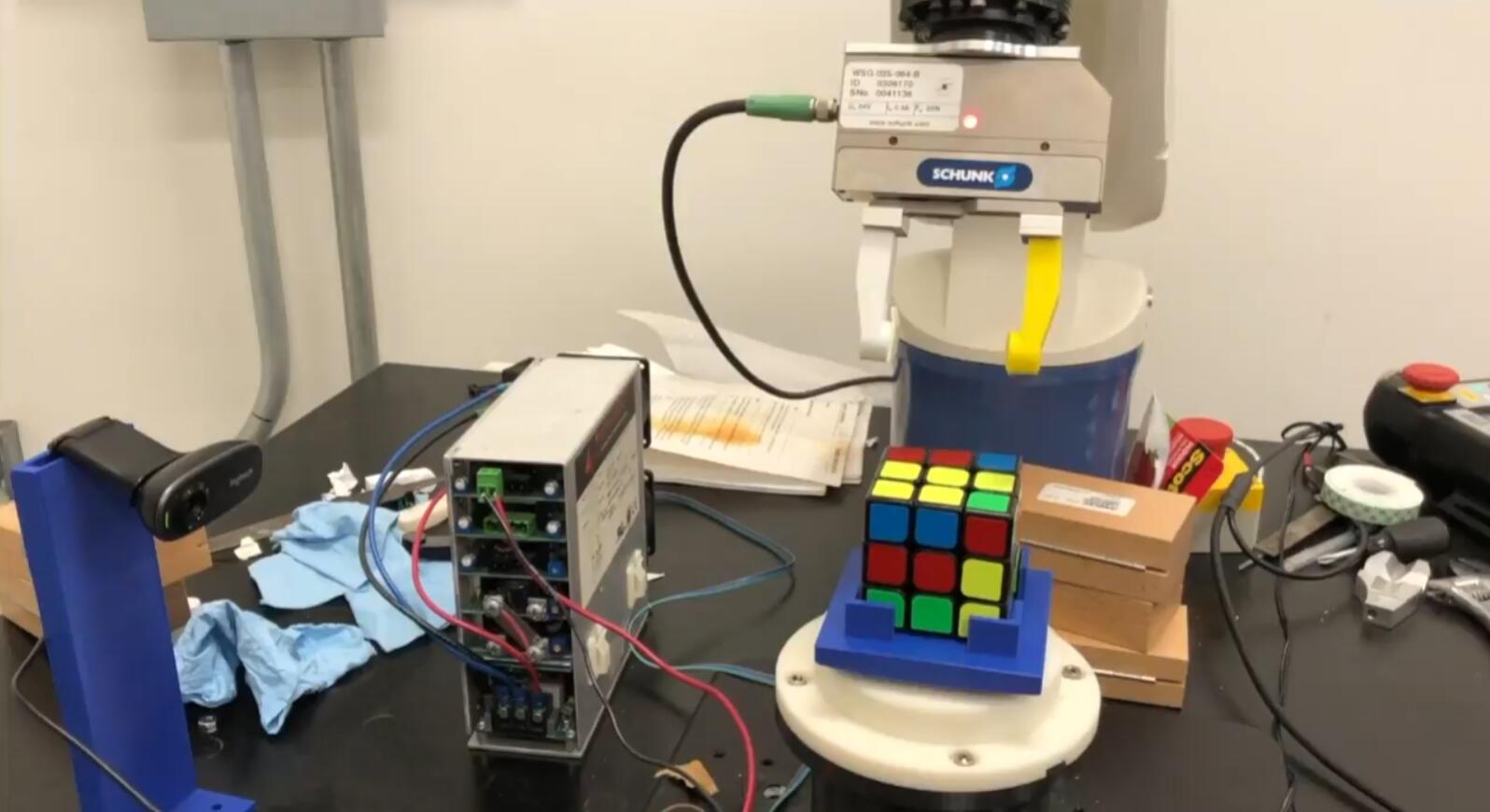

Apr. 2018 – June 2018: Control for Robotic Systems: Solving a Rubik’s Cube with a Robot Arm and Motors

Advisor: Veronica Santos, Associate Professor, Department of Mechanical Engineering, Director of the Biomechatronics Lab, UCLA

- Detected the state of a randomly shuffled Rubik’s Cube from an RGB image and generated motion commands.

- Used inverse kinematics for trajectory and position planning of the robot arm.

- Used PID position control to rotate the Rubik’s Cube and implemented real-time gripper force control to grasp it.